Overview

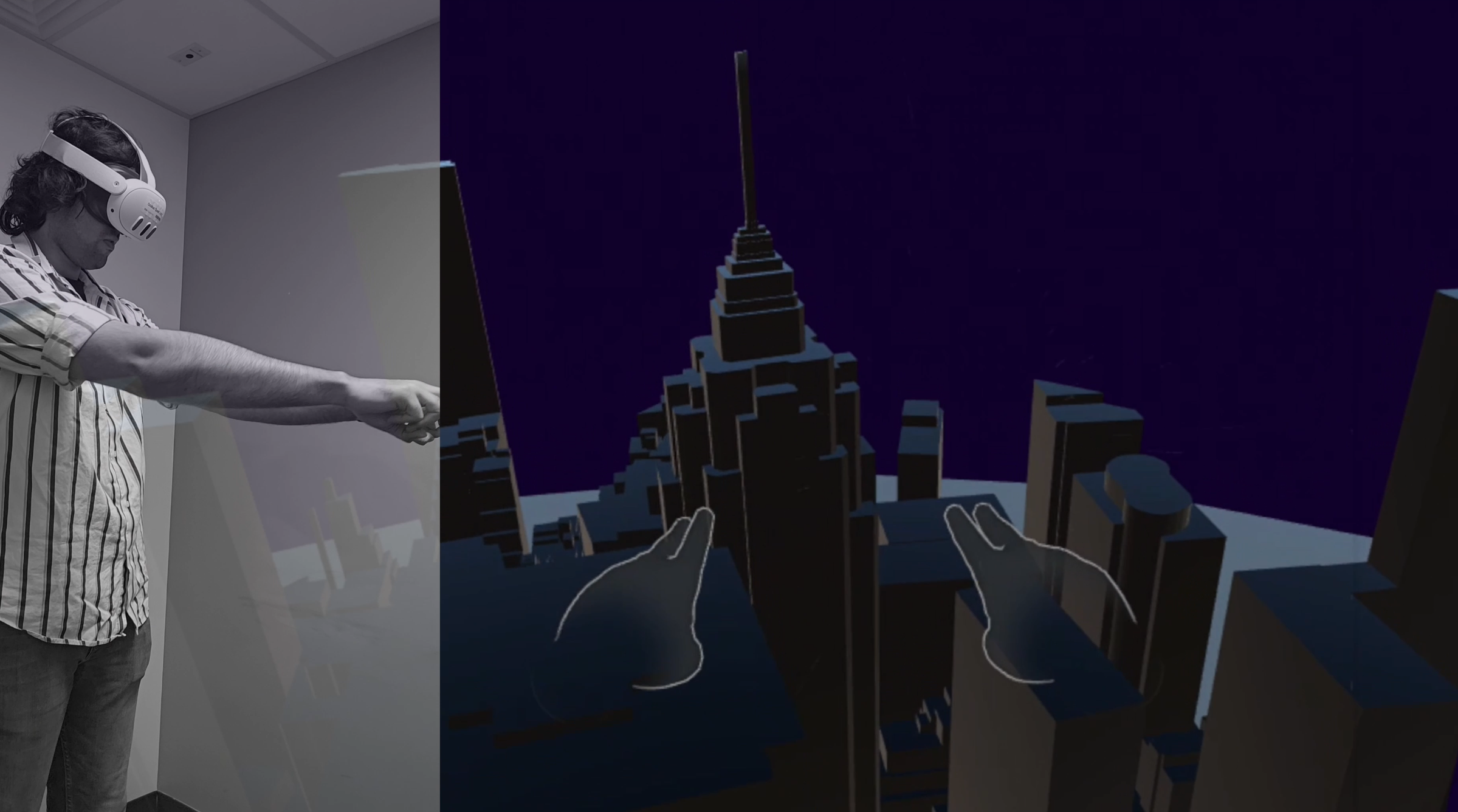

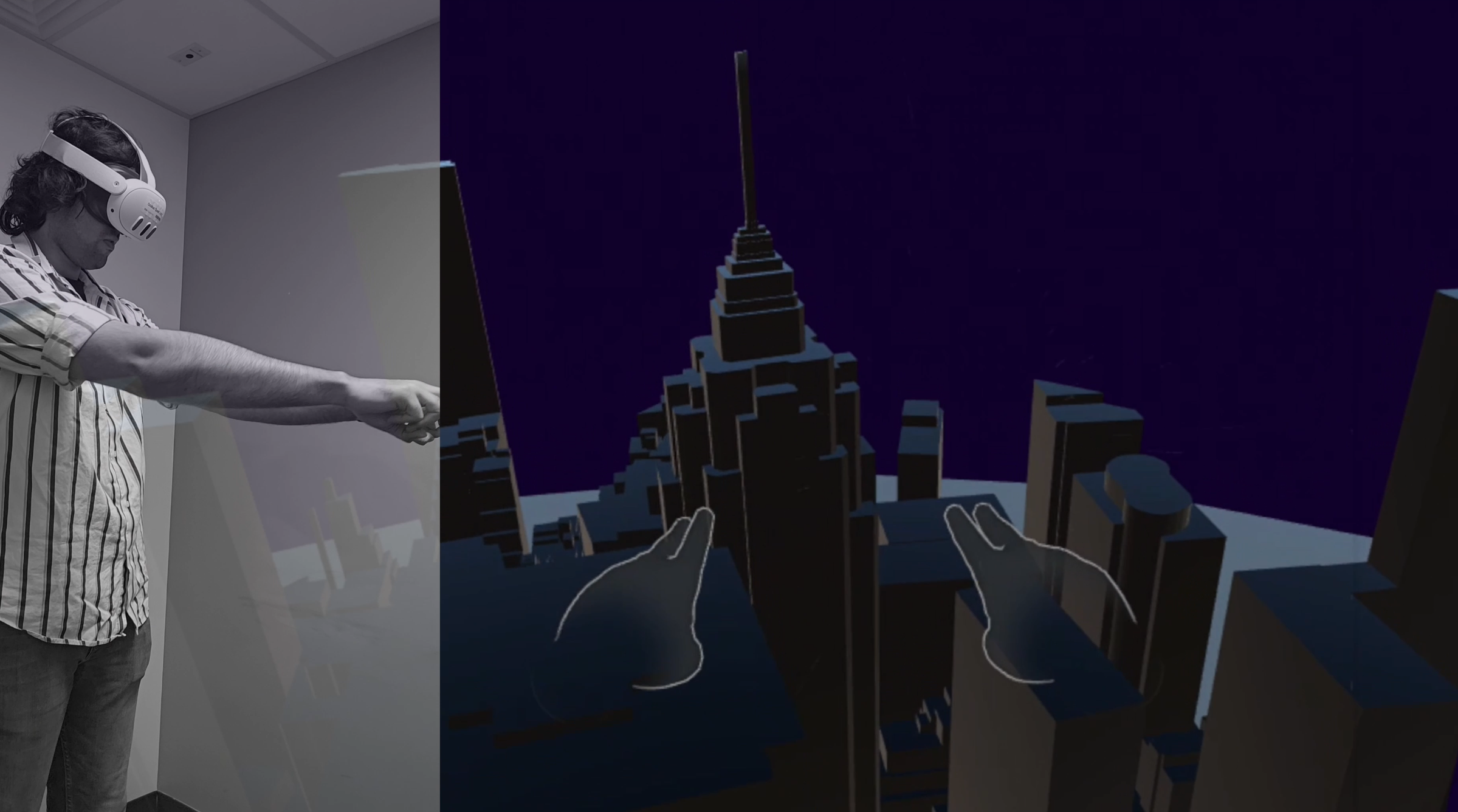

There is a noticeable lack of flight implementations in VR applications outside of aircraft and airships. The most popular implementations of six-degrees-of-freedom (6DoF) locomotion in VR rely on the controllers, while gesture-based systems are used almost exclusively in underwater environments that simulate swimming rather than flying. I therefore studied existing VR user interaction systems that allow six degrees of locomotive freedom. The resulting prototype is a fully realized gesture-based navigation system for flight in virtual reality, optimized for both intuitive controls and minimal vestibular disconnect.

Research Methods

Bodystorming

Played existing VR titles with 6DoF locomotion to identify motion, gesture, and comfort challenges.

Cognitive Walkthrough

Let users explore the locomotion system with minimal instruction: I explained only which buttons were usable, then observed how they discovered the controls.

Observation of Existing Solutions

Reviewed VR locomotion research, gameplay videos, and developer talks to map common challenges and best practices.

Participant Observation & Interviews

Watched players interact with an existing flying implementation (Soar), recorded suggestions and behavioural patterns, and conducted short interviews for qualitative insights.

Key Research Findings

Motion Sickness as a Barrier

Most people I approached for testing had used VR before, and the most common reason for declining to try the game was that earlier VR sessions had given them motion sickness or headaches. Motion in mid-air, with no matching vestibular feedback, can contribute more to motion sickness than grounded locomotion does.

Short Learning Curve

The people who tried the game quickly understood the controls and became comfortable with the input system within the first few minutes of play. The movement system on its own was enough to keep people engaged for a long time.

Positive Engagement

The general feedback I received was that the game was fun and that the motion felt smooth and well implemented.

Input Mapping Issues

Some users struggled with the input mapping, because flight was only enabled while both grip buttons on the controllers were held down.

“Superman” Mental Model

The flying itself felt natural and intuitive once I gave users a keyword: ‘Superman’. From my interviews, it seemed that everybody has a very clear picture of superhuman flight, especially since depictions of flying in films, television, and video games look largely the same. The game does a very good job of conveying what it would feel like if a human could fly without wings. This observation comes from my own experience as well as from the users I tested with.

Expectation of Mid-Air Turning

A key insight, raised by one of the users, was that the lack of input-driven turning reduces immersion. In a grounded VR game, turning typically requires the user to physically turn to face another direction, because the virtual ground is aligned with the real floor. While flying, however, this user expected to turn in mid-air through the flight input itself, without turning in real life, and compared it to steering a bicycle by rotating the handlebars. The system does not support this: the user always has to physically turn to face a different direction, even though in flight they expected some degree of rotation to be built into the controls.
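The handlebar analogy suggests one way mid-air turning could be prototyped. The sketch below is hypothetical and not part of the tested system: it assumes hand positions are available in the head's local frame (forward distance in metres) and derives a yaw rate from how far one hand is ahead of the other, like rotating a bicycle's handlebars. The function names, dead zone, gain, and rate cap are all invented for illustration.

```python
import math

# Hypothetical tuning values; none of these come from the tested prototype.
DEAD_ZONE_M = 0.05        # ignore hand offsets smaller than 5 cm (tracking jitter)
GAIN_DEG_PER_M = 150.0    # degrees/second of yaw per metre of offset
MAX_YAW_RATE_DEG = 45.0   # cap the turn speed to limit vestibular disconnect


def handlebar_yaw_rate(left_forward_m: float, right_forward_m: float) -> float:
    """Yaw rate in degrees/second from the forward offset between the hands.

    Pushing the left hand ahead of the right (like turning bicycle handlebars
    to the right) gives a positive rate, which this sketch treats as a right turn.
    """
    offset = left_forward_m - right_forward_m
    if abs(offset) < DEAD_ZONE_M:
        return 0.0
    # Subtract the dead zone so the rate ramps up from zero instead of jumping.
    effective = offset - math.copysign(DEAD_ZONE_M, offset)
    rate = GAIN_DEG_PER_M * effective
    return max(-MAX_YAW_RATE_DEG, min(MAX_YAW_RATE_DEG, rate))


def update_heading(heading_deg: float, rate_deg_per_s: float, dt_s: float) -> float:
    """Integrate the yaw rate over one frame and wrap the heading to [0, 360)."""
    return (heading_deg + rate_deg_per_s * dt_s) % 360.0


if __name__ == "__main__":
    heading = 0.0
    # Left hand 15 cm ahead of the right, held for one second at 90 frames/second.
    for _ in range(90):
        heading = update_heading(heading, handlebar_yaw_rate(0.65, 0.50), 1 / 90)
    print(round(heading, 1))
```

The dead zone keeps ordinary tracking jitter from producing a slow, unintended drift, and the rate cap is there because uncontrolled rotation is one of the stronger triggers of the motion sickness described above.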

Limited Experience with Hand-Tracking

Most users had little experience with hand-tracking-based input in VR, even though the technology has matured considerably and its limitations have shrunk. While hand tracking can be too slow and unreliable for competitive or high-difficulty games, it can be leveraged as a powerful input system in story-driven games and experiences.

Identified Challenges

Vestibular Disconnect

The lack of vestibular feedback causes two problems: reduced immersion and motion sickness.

Locomotion System Integration

- With a flying experience, players can lose their point of reference because they are no longer on the ground.

- Implementing flight mechanics into an intuitive hand tracked or gesture-based input system is complex.

- Flight is also a challenge for game designers: because users are not limited to a ground plane, the freedom of movement demands more complex game worlds.

Hardware Limitations

ML camera-based hand tracking is limited because hands can only be tracked while they are within the camera's field of view.

Process

- Literature review of existing VR locomotion methods and user pain points.

- Conceptualizing gesture-based controls mapping physical hand movements to 6DoF flight.

- Implementing the prototype in Unity using advanced hand tracking SDKs.

- Conducting user testing to evaluate motion sickness and intuitive navigation.

- Iterative refinement of the control algorithms based on user feedback.

Additional Info

The project focused on reducing "VR sickness" while maintaining the sense of freedom that 6DoF flight provides. By mapping acceleration and direction to intuitive hand shapes and orientations, we achieved a significantly higher proficiency rate than standard joystick-based flight in initial user trials.
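To illustrate what mapping acceleration and direction to hand pose can look like, here is a hypothetical sketch. It is not the prototype's code, and the threshold and acceleration values are invented. It assumes a ‘Superman’ style pose in which the player flies toward wherever their hands are reaching: the direction runs from the head to the midpoint of both hands, and thrust grows once the hands extend past a distance threshold. Positions are world-space metres. It uses hand positions only; the hand-shape component mentioned above is left out.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical tuning values, not the prototype's actual thresholds.
EXTENSION_THRESHOLD_M = 0.35  # hands closer to the head than this give no thrust
FULL_EXTENSION_M = 0.65       # thrust saturates at this head-to-hands distance
MAX_ACCEL = 8.0               # m/s^2 at full extension
DRAG_PER_S = 1.5              # linear damping so the player coasts to a stop


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def flight_acceleration(head: Vec3, left: Vec3, right: Vec3) -> Vec3:
    """Acceleration pointing from the head toward the midpoint of both hands."""
    reach = sub(scale(add(left, right), 0.5), head)
    dist = math.sqrt(reach[0] ** 2 + reach[1] ** 2 + reach[2] ** 2)
    if dist <= EXTENSION_THRESHOLD_M:
        return (0.0, 0.0, 0.0)
    # 0 at the threshold, 1 at full extension, clamped beyond that.
    t = min(1.0, (dist - EXTENSION_THRESHOLD_M) / (FULL_EXTENSION_M - EXTENSION_THRESHOLD_M))
    # Normalize the reach vector and scale it by the thrust magnitude.
    return scale(reach, MAX_ACCEL * t / dist)


def step_velocity(velocity: Vec3, accel: Vec3, dt: float) -> Vec3:
    """Explicit Euler step followed by linear drag."""
    v = add(velocity, scale(accel, dt))
    return scale(v, max(0.0, 1.0 - DRAG_PER_S * dt))
```

Ramping thrust up from a threshold, rather than switching it on at full strength, avoids sudden accelerations, which are a common source of discomfort in VR locomotion.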

Future Plans

- According to user feedback, the interaction system is fun to use, and it could be adopted by VR applications that need 6DoF movement without relying on controllers.

- I tuned the system to my own body, so the thresholds do not work the same for everyone: different arm lengths result in unpredictable behaviour. Normalizing the distance thresholds to each user's arm length and making them dynamic could address this issue.

- More user research should be conducted on how VR applications could implement smooth turning using spatial references while causing minimal vestibular disconnect.
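The arm-length normalization proposed in the second point could look roughly like the following minimal sketch. It assumes a short calibration step in which the player stretches their arms out while the app records the farthest head-to-hand distance. `ArmCalibration`, `thrust_fraction`, the default reach, and the threshold fractions are hypothetical. Thresholds are then expressed as fractions of each player's own reach rather than fixed distances in metres.

```python
import math
from typing import Sequence


class ArmCalibration:
    """Hypothetical per-player reach calibration (positions in metres)."""

    def __init__(self, default_reach_m: float = 0.6, min_plausible_m: float = 0.3):
        self.reach_m = default_reach_m          # used until calibration succeeds
        self.min_plausible_m = min_plausible_m  # reject calibrations that are clearly too short
        self._max_seen_m = 0.0

    def observe(self, head: Sequence[float], hand: Sequence[float]) -> None:
        """Record one tracked frame while the player stretches an arm out."""
        self._max_seen_m = max(self._max_seen_m, math.dist(head, hand))

    def finish(self) -> None:
        """Adopt the longest observed reach if it looks plausible."""
        if self._max_seen_m >= self.min_plausible_m:
            self.reach_m = self._max_seen_m

    def extension(self, head: Sequence[float], hand: Sequence[float]) -> float:
        """Head-to-hand distance as a fraction of this player's reach, capped at 1."""
        return min(1.0, math.dist(head, hand) / self.reach_m)


# Thresholds as fractions of reach instead of fixed distances (hypothetical values).
THRUST_START = 0.55
THRUST_FULL = 0.95


def thrust_fraction(ext: float) -> float:
    """0 at or below THRUST_START, 1 at THRUST_FULL, linear in between."""
    if ext <= THRUST_START:
        return 0.0
    return min(1.0, (ext - THRUST_START) / (THRUST_FULL - THRUST_START))
```

With this approach, a player with a short reach and one with a long reach both start accelerating at the same proportion of their own arm extension, so the same pose produces the same behaviour for both.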